Roysdon AI

Overview

268 From-Scratch Implementations in MATLAB, Python, and R — Companion to the Textbook

Visit this page for more details or, better yet, get the book!

Build machine learning from first principles — without black boxes

Most people learn machine learning in one of two ways: they read equations in papers, or they call libraries in code. What’s missing is the bridge. The Roysdon AI Toolbox is the companion software suite to A Comprehensive Approach to Data Science, Machine Learning and AI. It contains 268 runnable implementations across MATLAB, Python, and R, designed to close the gap between:

Equations → Algorithms (turn objectives and update rules into step-by-step procedures)

Algorithms → Code (map each step into correct, minimal implementations)

Code → Intuition (see how knobs, failure modes, and data geometry actually change behavior)

This toolbox is built for readers who want more than API fluency. It is for engineers, researchers, and practitioners who want mechanical understanding—the kind that lets you debug, modify, extend, and trust what you deploy.

What you get

A complete “from-scratch” companion to a full ML curriculum

The toolbox mirrors the book’s end-to-end learning path across 57 chapters, spanning:

Preliminaries & foundations (probability, optimization, linear algebra, feature engineering)

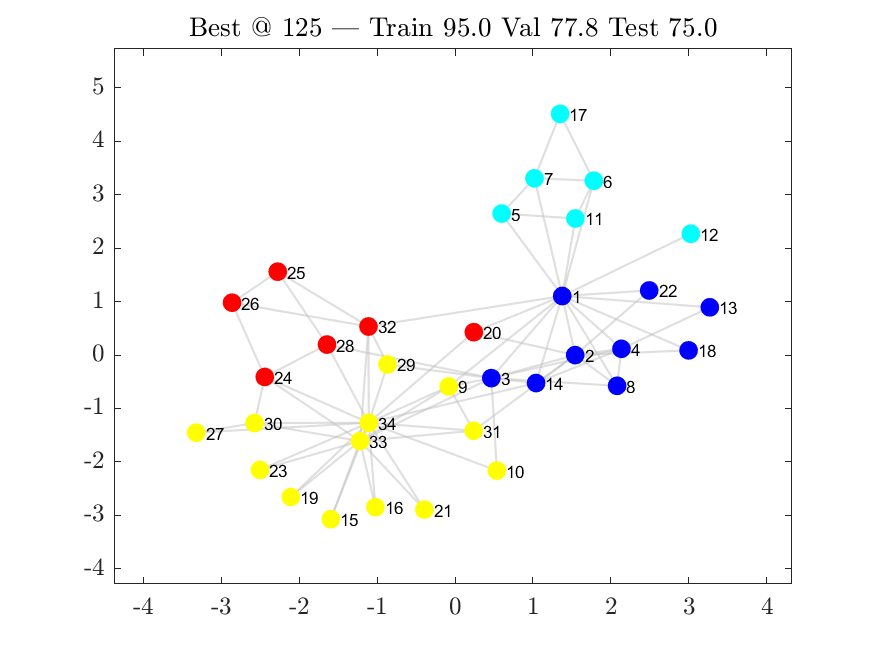

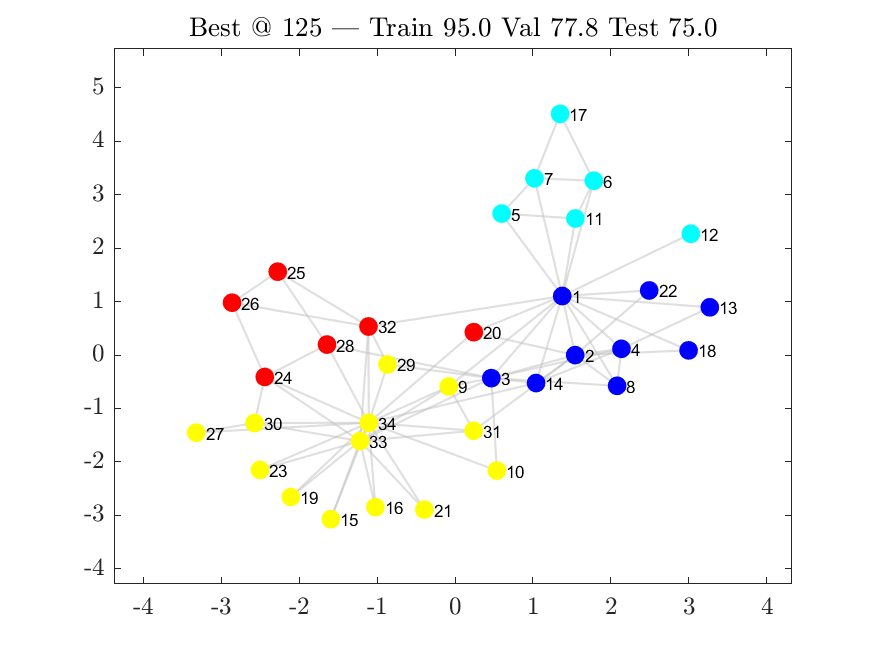

Dimension reduction (PCA, SVD, manifold methods, LDA)

Unsupervised learning (k-Means, KDE, DBSCAN, EM/GMMs)

Supervised learning

Classification (trees, forests, boosting, logistic regression, SVMs, KNN, Naive Bayes)

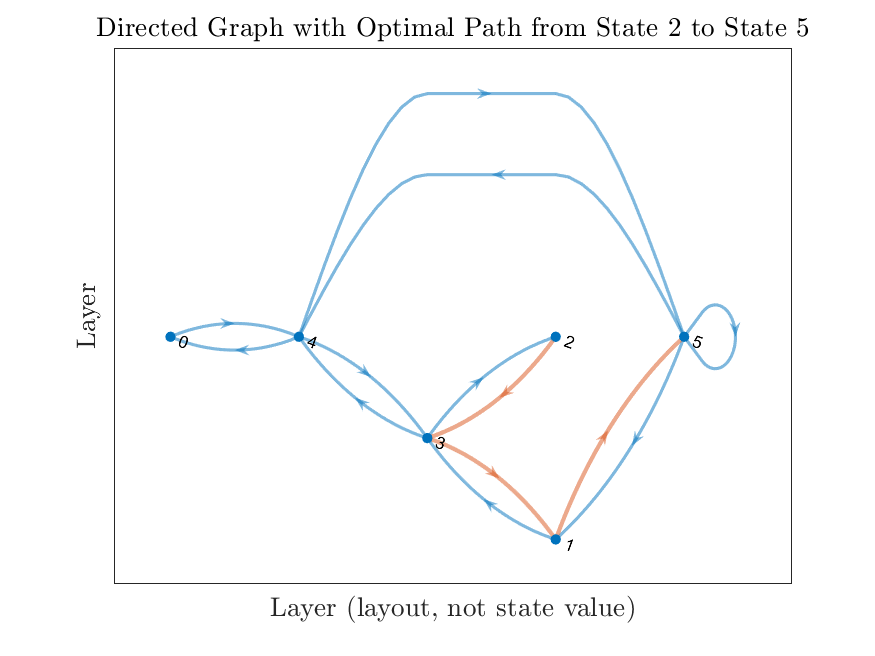

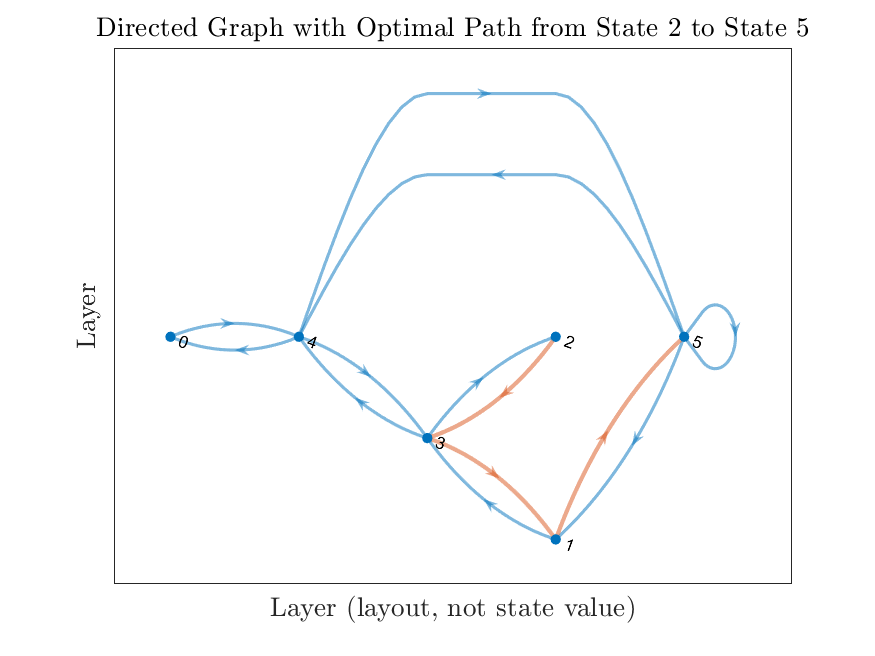

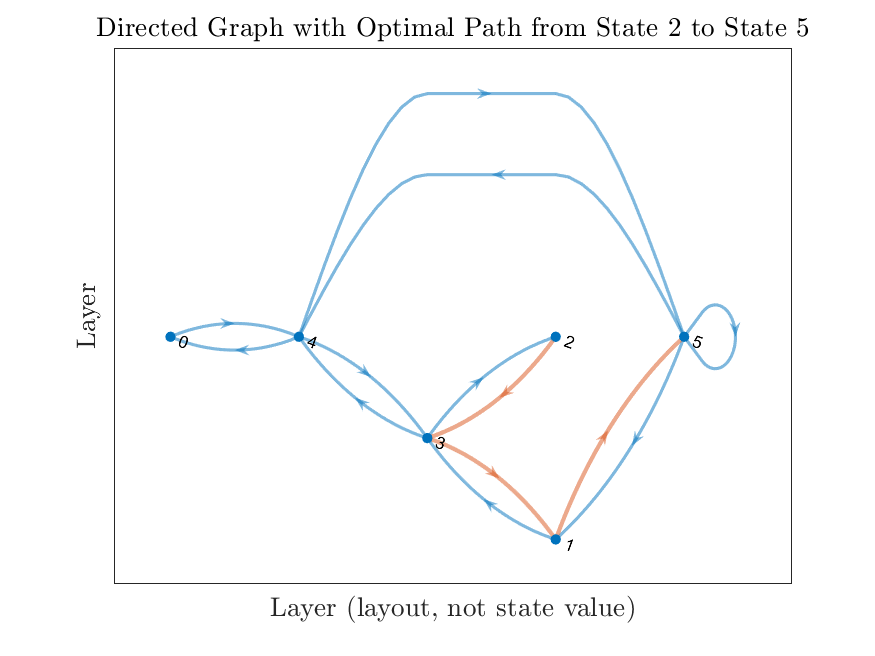

Regression (linear regression plus optimization-based methods like LP/MILP and graph/network optimization)

Optimal filtering & estimation (MLE, EKF, and advanced estimator architectures)

Deep learning

Foundations (vanilla neural networks)

Sequence models (RNN, LSTM, Transformers)

Graphical models (CNN, GCN)

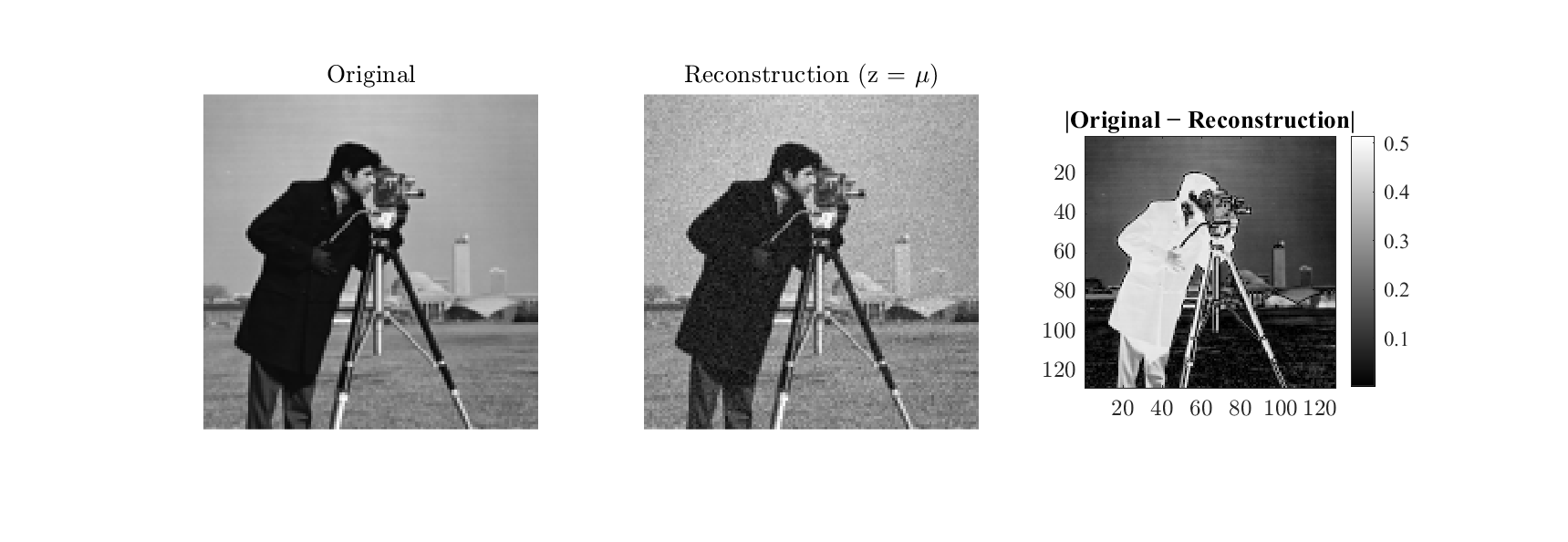

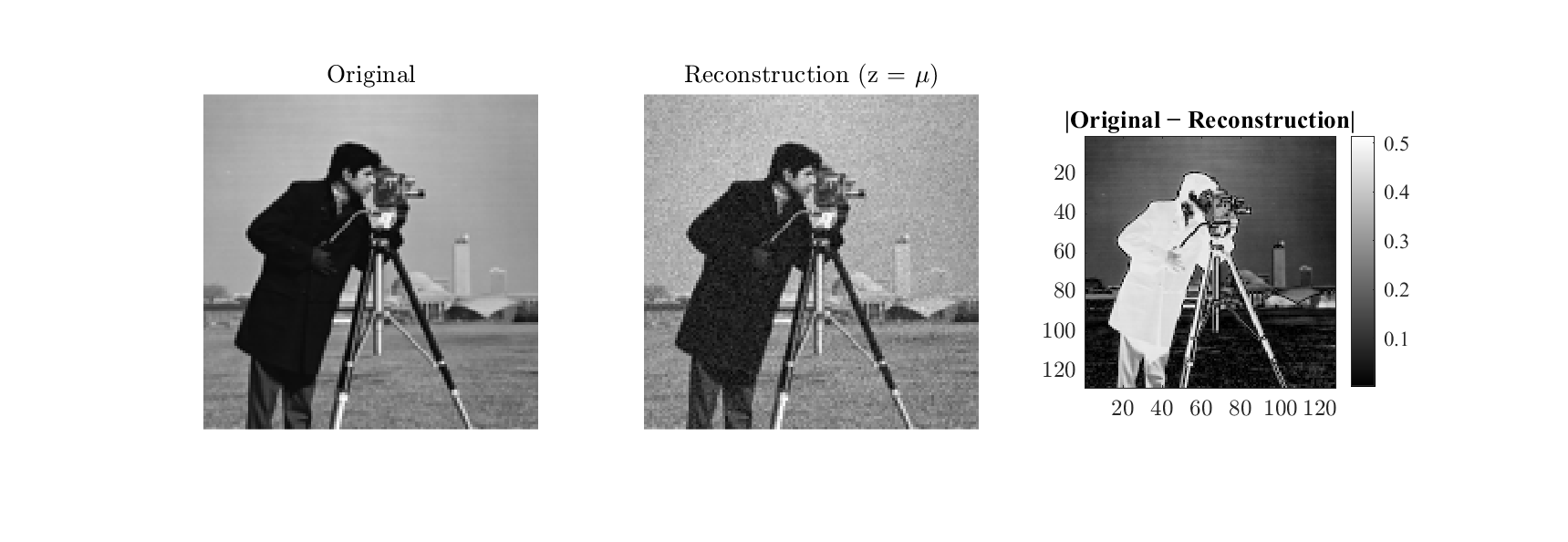

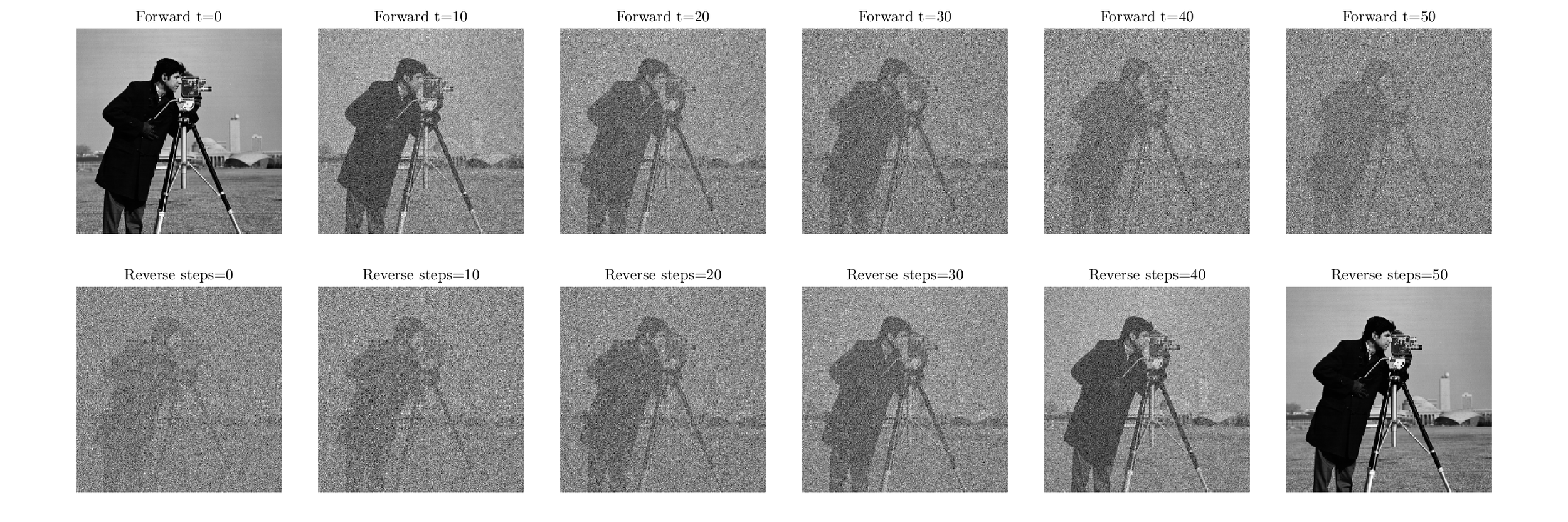

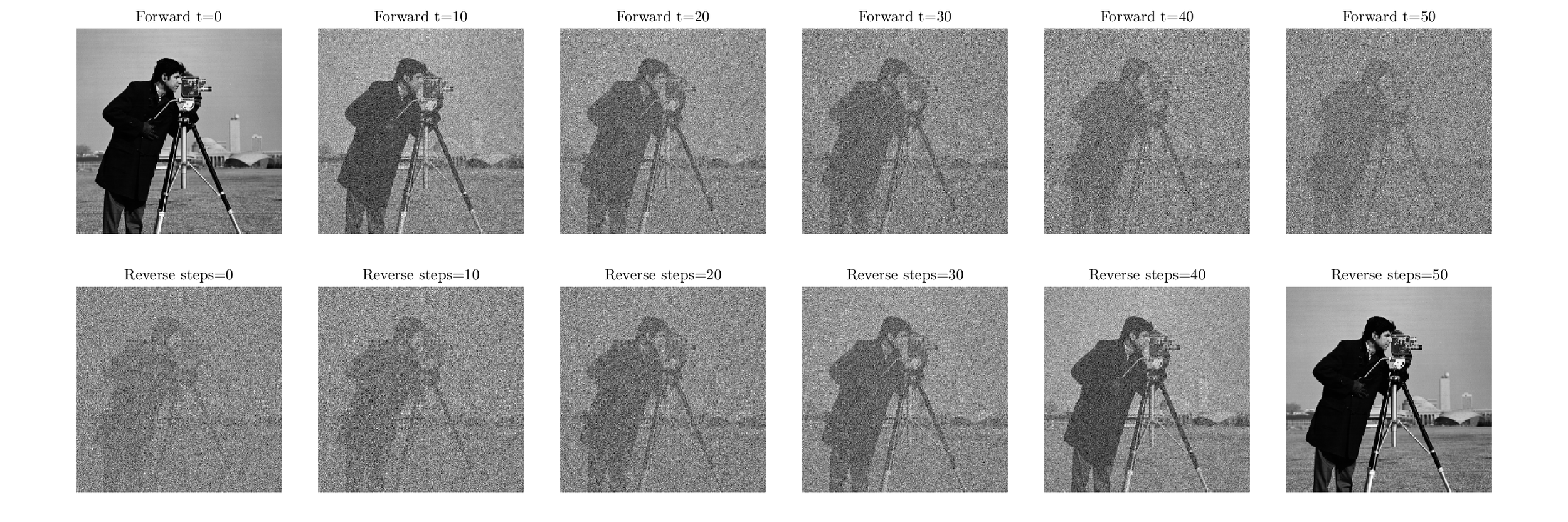

Generative models (Autoencoders, VAE, GAN, Diffusion, adversarial methods)

Reinforcement learning (Q-learning, policy gradients, DDPG, PPO, GRPO)

Advanced techniques (numerical differentiation, Adam, embeddings, LoRA, RLHF, MoE, distillation, MCMC, MCTS, RAG, model improvements)

Why this toolbox is different

1) Algorithm & code are line-for-line aligned

Each implementation is designed so that:

every line of code traces to a step in pseudocode

every pseudocode step traces to an equation or objective

the result is inspectable, modifiable, and teachable

You’re not learning what to call. You’re learning how it works.
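As a rough illustration of what equation-to-code traceability can look like, here is a minimal sketch in plain Python. It is not taken from the toolbox: the function name `fit_line`, the step numbering, and the sample data are assumptions made for this example. It fits y ≈ w·x + b by gradient descent on J(w, b) = (1/2n) Σ (w·x_i + b − y_i)², with each line annotated by the step or equation it implements.

```python
def fit_line(xs, ys, alpha=0.1, steps=2000):
    """Gradient descent for y ~ w*x + b, minimizing J = (1/2n) * sum(r_i^2)."""
    n = len(xs)
    w, b = 0.0, 0.0                                       # step 1: initialize parameters
    for _ in range(steps):                                # step 2: repeat for a fixed budget
        rs = [w * x + b - y for x, y in zip(xs, ys)]      # step 3: r_i = w*x_i + b - y_i
        grad_w = sum(r * x for r, x in zip(rs, xs)) / n   # step 4: dJ/dw = (1/n) sum r_i*x_i
        grad_b = sum(rs) / n                              # step 5: dJ/db = (1/n) sum r_i
        w -= alpha * grad_w                               # step 6: w <- w - alpha * dJ/dw
        b -= alpha * grad_b                               # step 7: b <- b - alpha * dJ/db
    return w, b


# Hypothetical noise-free data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = fit_line(xs, ys)
print(f"w={w:.6f}, b={b:.6f}")
```

Because every line maps to one step, changing the objective (for example, adding a penalty on w) means editing the matching gradient line and nothing else.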

2) Intuition is engineered, not hand-waved

The toolbox is built to support the “intuition-first” philosophy:

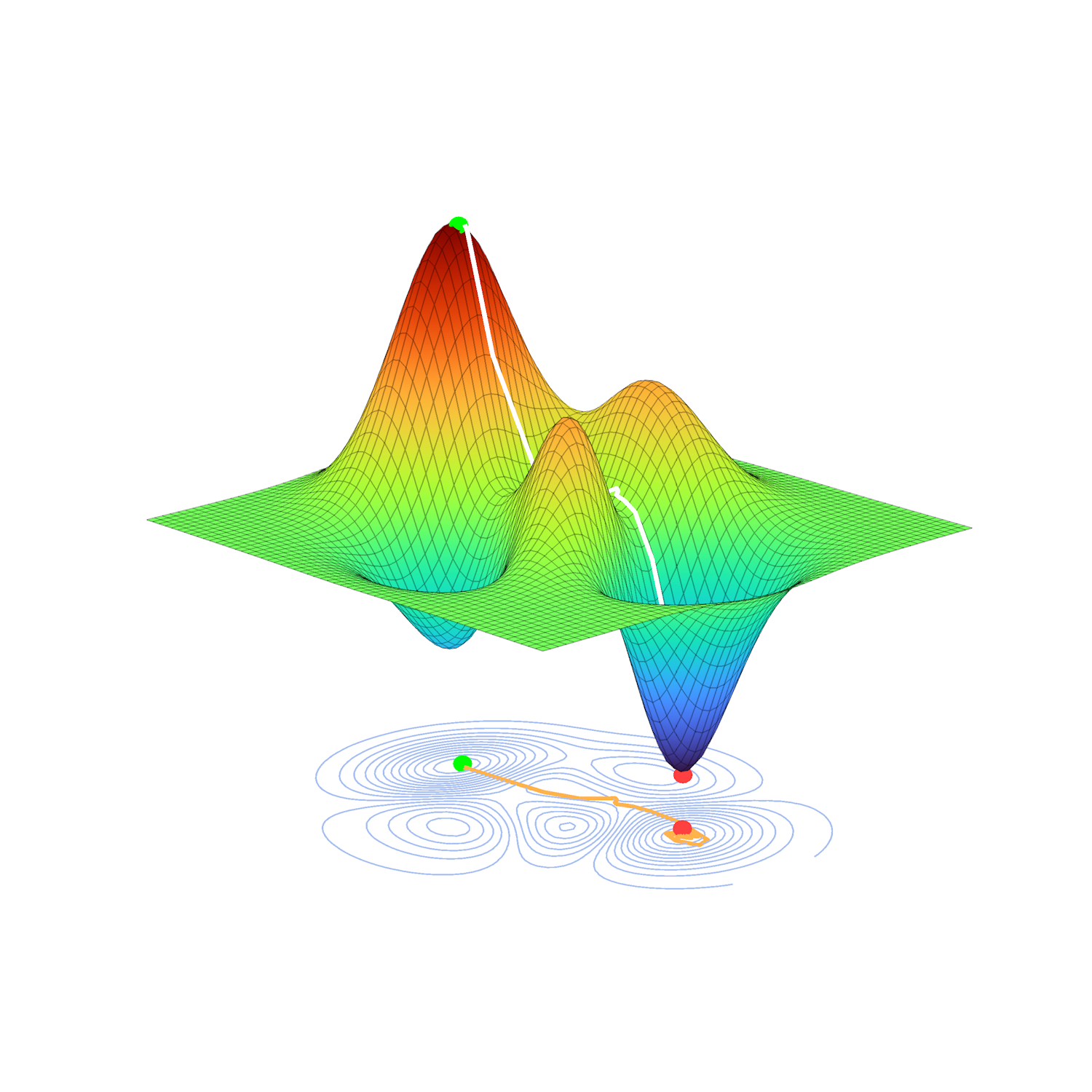

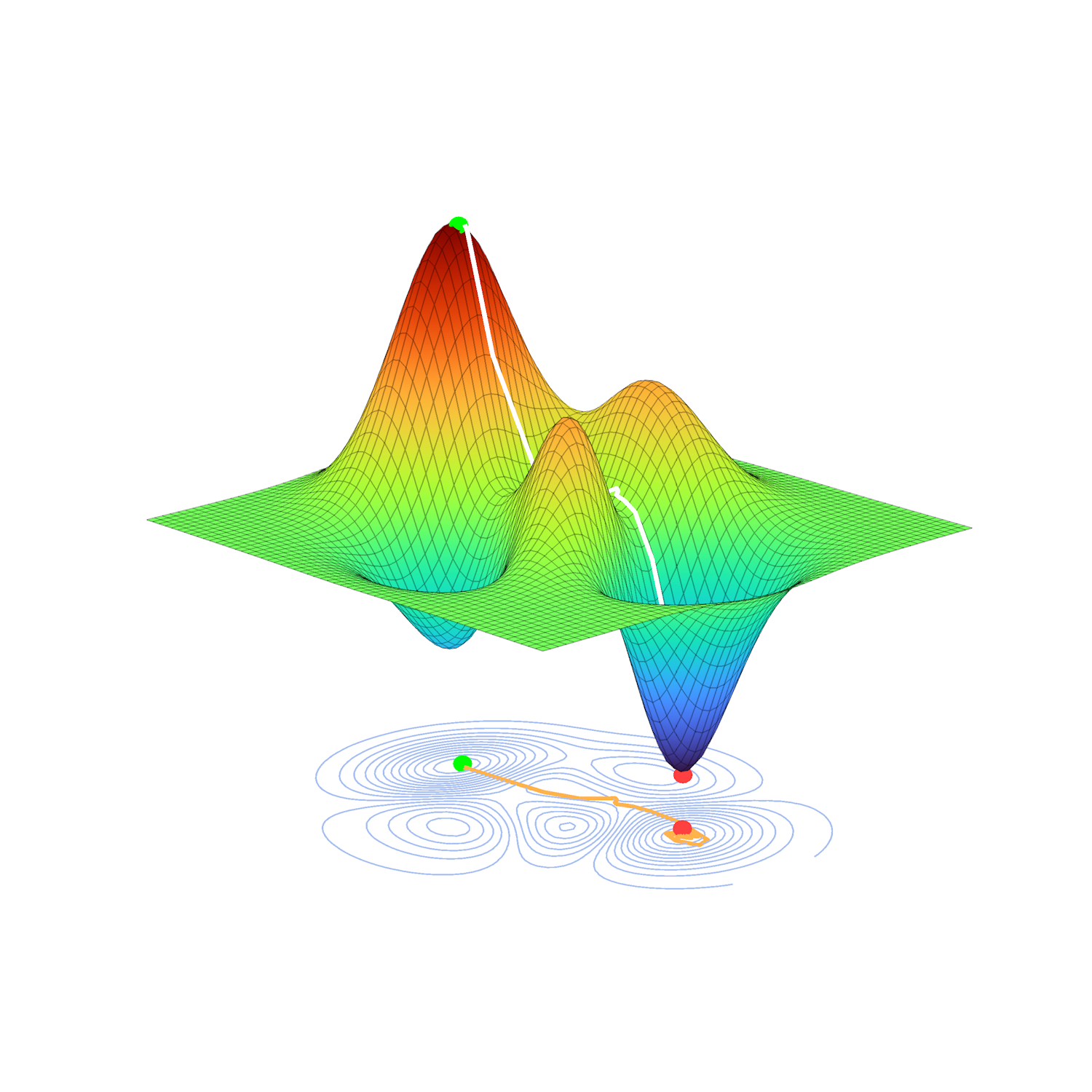

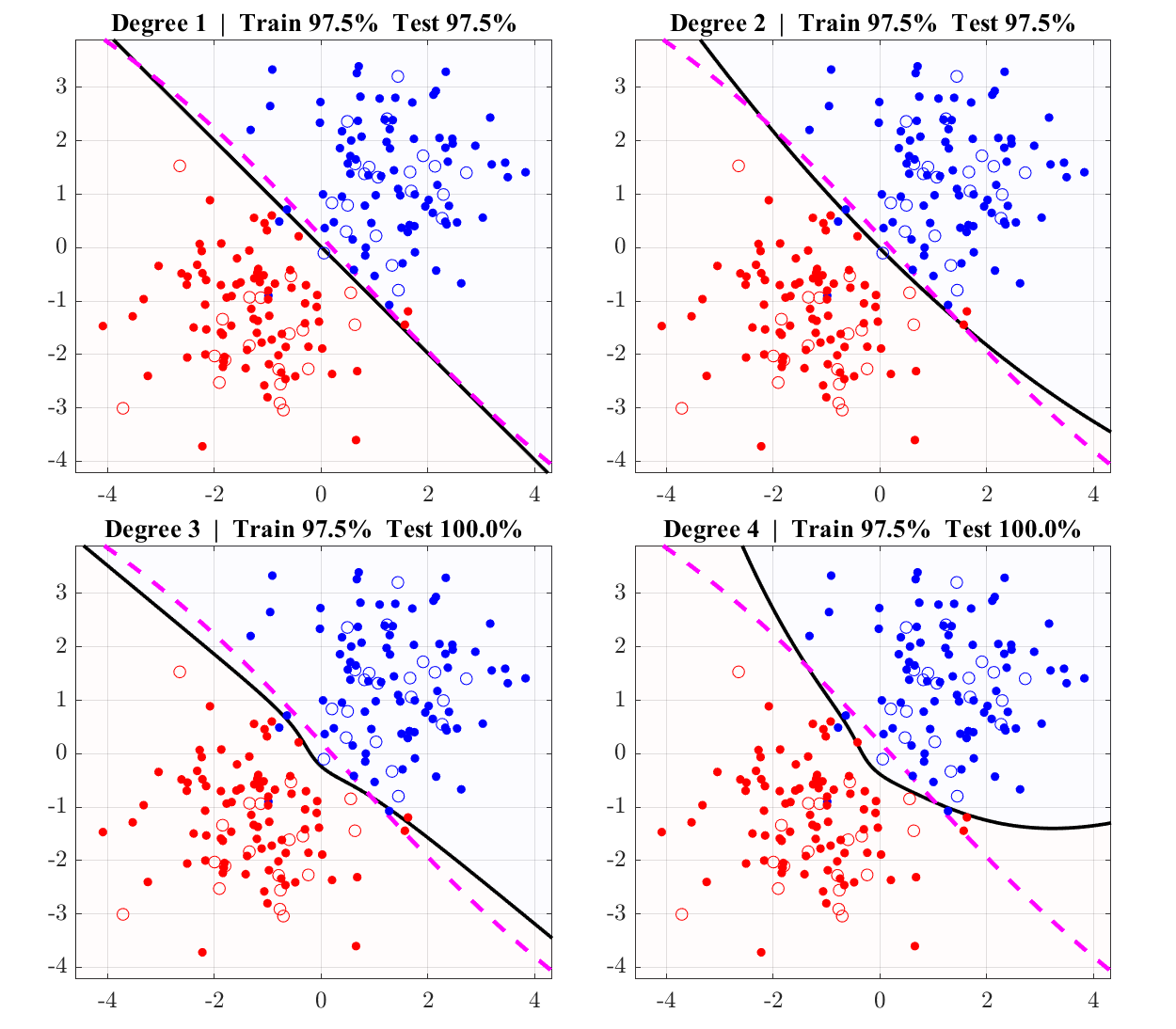

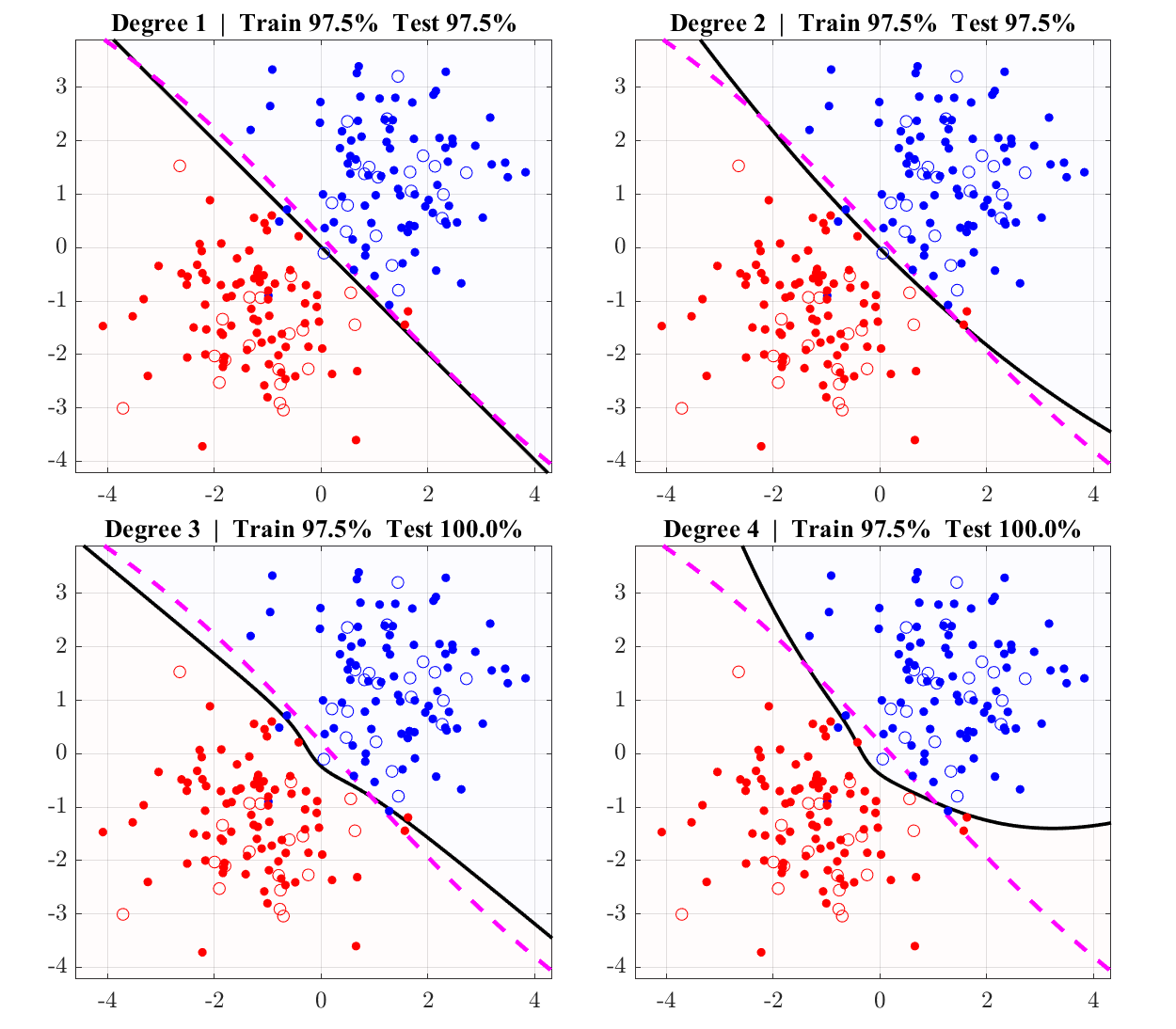

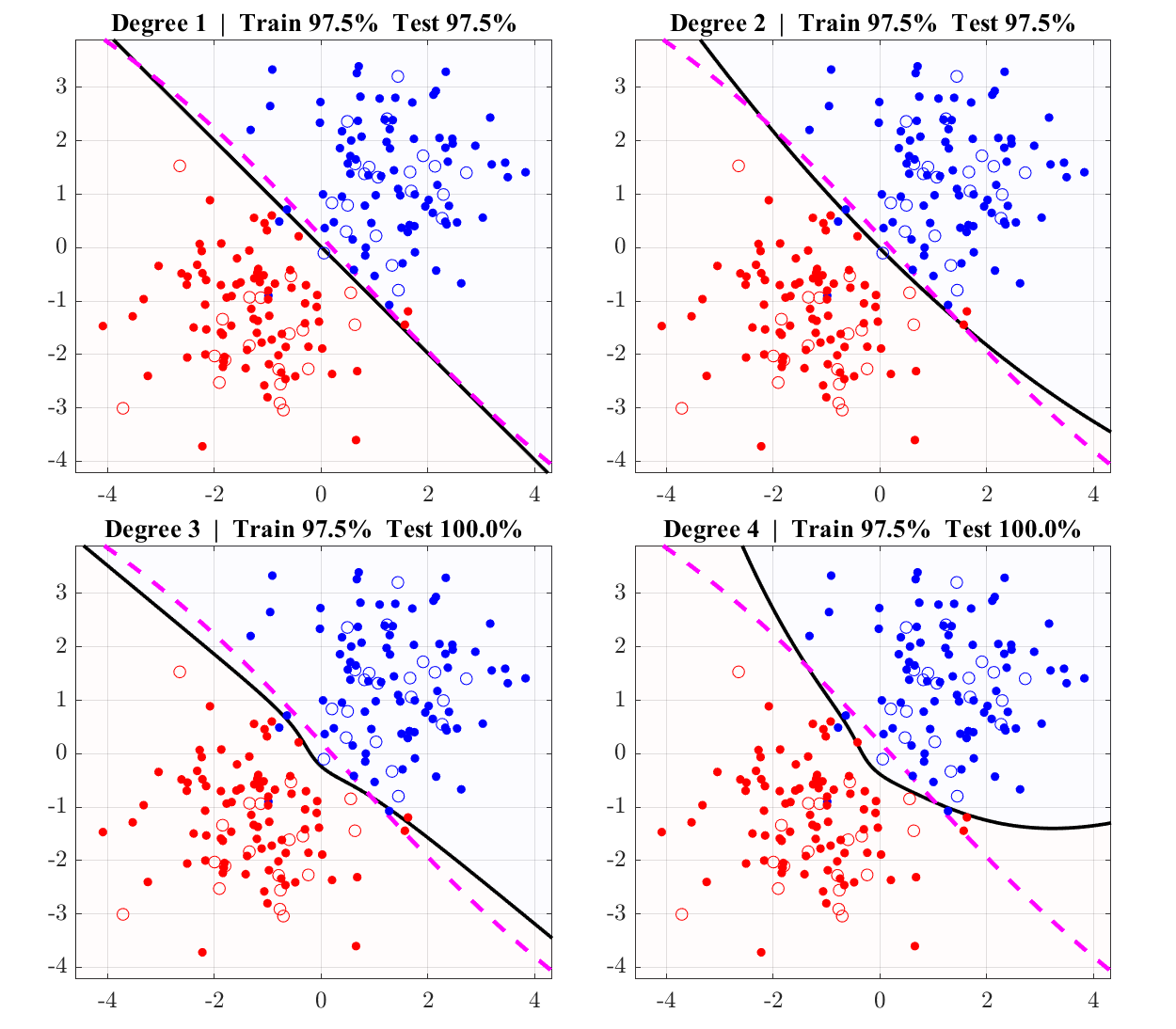

parameter sweeps that show how behavior changes

plots that expose failure modes and edge cases

datasets and metrics that make performance measurable
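To make the idea of a parameter sweep concrete, the sketch below varies the step size of gradient descent on f(x) = x² and records the final loss for each setting. It is an assumed example rather than toolbox code: the function `final_loss` and the chosen step sizes are hypothetical. On this function each update multiplies x by (1 − 2·alpha), so the sweep exposes both slow convergence and outright divergence.

```python
def final_loss(alpha, steps=50, x0=1.0):
    """Run gradient descent on f(x) = x^2 and return the loss after `steps` updates."""
    x = x0
    for _ in range(steps):
        x -= alpha * 2.0 * x   # gradient of x^2 is 2x, so x <- (1 - 2*alpha) * x
    return x * x


# Hypothetical sweep values: too small, reasonable, ideal, oscillating, unstable.
alphas = [0.01, 0.1, 0.5, 0.9, 1.1]
sweep = {a: final_loss(a) for a in alphas}

for a, loss in sweep.items():
    print(f"alpha={a:<5} final loss={loss:.3e}")
```

A step size above 1.0 makes |1 − 2·alpha| exceed 1, so the iterate grows on every step. That is the kind of failure mode a sweep makes visible before it shows up in a larger model.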

3) MATLAB / Python / R parity

The book uses MATLAB for compactness, but the toolbox provides parity across MATLAB, Python, and R so you can:

learn in MATLAB and deploy in Python

teach across environments

verify that results match across languages
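One way to check that two implementations agree is to compare their outputs within a floating-point tolerance rather than for exact equality. The sketch below is an assumption about how such a check might look, not the toolbox's mechanism. It uses two different variance algorithms in Python as stand-ins for two language ports, and the helper `results_match` and its tolerances are hypothetical.

```python
import math


def variance_two_pass(xs):
    """Sample variance: compute the mean first, then the squared deviations."""
    n = len(xs)
    mean = sum(xs) / n
    return sum((x - mean) ** 2 for x in xs) / (n - 1)


def variance_welford(xs):
    """Sample variance via Welford's single-pass running update."""
    mean, m2 = 0.0, 0.0
    for k, x in enumerate(xs, start=1):
        delta = x - mean
        mean += delta / k
        m2 += delta * (x - mean)
    return m2 / (len(xs) - 1)


def results_match(a, b, rel_tol=1e-9, abs_tol=1e-12):
    """Tolerance-based comparison; the tolerances are illustrative choices."""
    return math.isclose(a, b, rel_tol=rel_tol, abs_tol=abs_tol)


data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
v1 = variance_two_pass(data)
v2 = variance_welford(data)
print("match:", results_match(v1, v2))
```

In a cross-language setting, one of the two values would instead be loaded from a file written by the MATLAB or R run on the same input data.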

4) Consistent notation end-to-end

A major cause of confusion in ML is drifting notation across chapters and sources.

This toolbox follows the same convention as the book: symbols introduced in intuition show up unchanged in algorithms, code, and math—so you build one mental model and reuse it everywhere.

Who it’s for

Beginners who want intuition that sticks (graphs first, then code, then math)

Practitioners who need implementations they can benchmark, profile, and adapt

Researchers who want to modify objectives/updates and re-implement correctly

Educators who want teachable code that doesn’t hide the mechanics

Typical use cases

Build “from scratch” versions to understand what libraries are doing

Validate assumptions and failure modes before production deployment

Prototype new variants of standard methods by modifying objectives/updates

Create assignments, labs, and course modules with runnable reference implementations

Cross-check results across MATLAB/Python/R to eliminate implementation drift

What this is not

This is not a tour of APIs.

It is not a math refresher.

It is a deliberately structured system that teaches you to translate fluently among:

equations → algorithms → code ↔ intuition

That fluency is what enables real engineering: debugging, extending, and trusting ML systems.

Companion to the textbook

The Roysdon AI Toolbox is designed to be used chapter-by-chapter alongside the book.

By the end of each chapter, you will be able to:

sketch behavior and failure modes from intuition alone

implement the algorithm from scratch (without black-box libraries)

derive or verify the key equations that justify the implementation

translate among equations, algorithms, code, and intuition fluently

Pricing & Licensing

Individual License: $100 (one-time)

For independent engineers, researchers, and educators

Full access to selected toolboxes

Non-commercial use

Local execution only

Source access for learning and experimentation

Restrictions

No support (training support can be negotiated)

No resale, redistribution, or commercial deployment

No SaaS, hosted services, or client deliverables

Typical Buyers

PhD students, faculty, hobbyists, professionals, and educators

Licensing

See License terms here.
